¹Cognitive Learning for Vision and Robotics Lab, USC

²Pohang University of Science and Technology

*Equal contribution

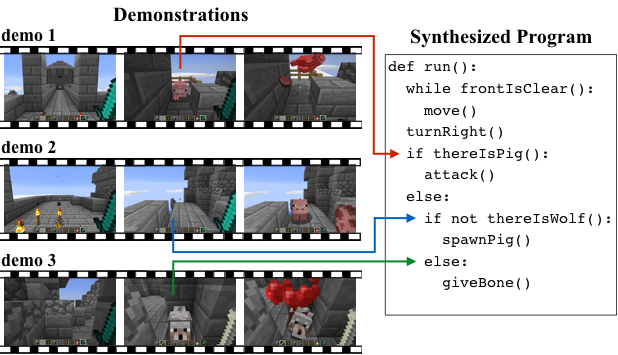

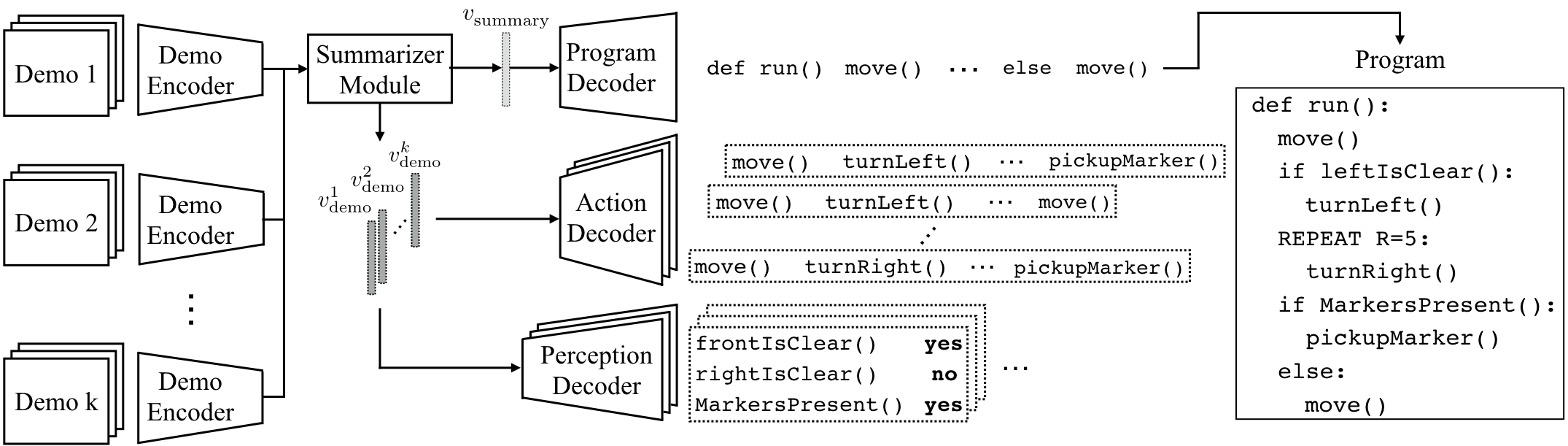

Interpreting the decision-making logic in demonstration videos is key to collaborating with and imitating humans. To give machines this ability, we propose a neural program synthesizer that explicitly synthesizes the underlying programs from behaviorally diverse and visually complex demonstration videos. We introduce a summarizer module as part of our model to improve the network’s ability to integrate multiple demonstrations that vary in behavior. We also employ a multi-task objective that encourages the model to learn meaningful intermediate representations during end-to-end training. We show that our model reliably synthesizes the underlying programs and captures the diverse behaviors exhibited in the demonstrations.
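To make the two ideas above more concrete, here is a minimal, dependency-free sketch. It is not the paper's implementation: the names `summarize_demos` and `multitask_loss` are hypothetical, the summarizer is assumed to average a pairwise `relate` function over all ordered pairs of per-demonstration embeddings (so the summary can depend on how demonstrations differ), and the auxiliary loss terms and their weights are placeholders for whatever intermediate predictions a multi-task objective might supervise.

```python
from typing import Callable, List, Sequence

Vector = List[float]
RelateFn = Callable[[Vector, Vector], Vector]


def summarize_demos(demo_embeddings: Sequence[Vector], relate: RelateFn) -> Vector:
    """Fuse k per-demonstration embeddings into one summary vector.

    Hypothetical aggregation: apply `relate` to every ordered pair (a, b) of
    demonstration embeddings, including a paired with itself, and average.
    """
    if not demo_embeddings:
        raise ValueError("need at least one demonstration embedding")
    total: Vector = []
    count = 0
    for a in demo_embeddings:
        for b in demo_embeddings:
            r = relate(a, b)
            # Start the running sum with the first result, then add elementwise.
            total = list(r) if count == 0 else [t + x for t, x in zip(total, r)]
            count += 1
    return [t / count for t in total]


def multitask_loss(program_loss: float, aux_losses: Sequence[float],
                   weights: Sequence[float]) -> float:
    """Program loss plus weighted auxiliary losses (assumed, illustrative terms)."""
    if len(aux_losses) != len(weights):
        raise ValueError("need exactly one weight per auxiliary loss")
    return program_loss + sum(w * l for w, l in zip(weights, aux_losses))


def abs_diff(a: Vector, b: Vector) -> Vector:
    """Toy relation: elementwise absolute difference between two embeddings."""
    return [abs(x - y) for x, y in zip(a, b)]


if __name__ == "__main__":
    print(summarize_demos([[1.0, 0.0], [0.0, 1.0]], abs_diff))  # [0.5, 0.5]
    print(multitask_loss(2.0, [1.0, 0.5], [0.5, 2.0]))  # 3.5
```

Averaging over pairs keeps the summary's size fixed regardless of how many demonstrations are supplied, which is one plausible way to let a single decoder consume a variable number of behaviorally different demonstrations.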

A brief overview of our model.

@inproceedings{sun2018neural,
  title = {Neural Program Synthesis from Diverse Demonstration Videos},
  author = {Sun, Shao-Hua and Noh, Hyeonwoo and Somasundaram, Sriram and Lim, Joseph},
  booktitle = {Proceedings of the 35th International Conference on Machine Learning},
  year = {2018},
}

Check out some other recent work in program synthesis: